6 Phases of AI Project Management

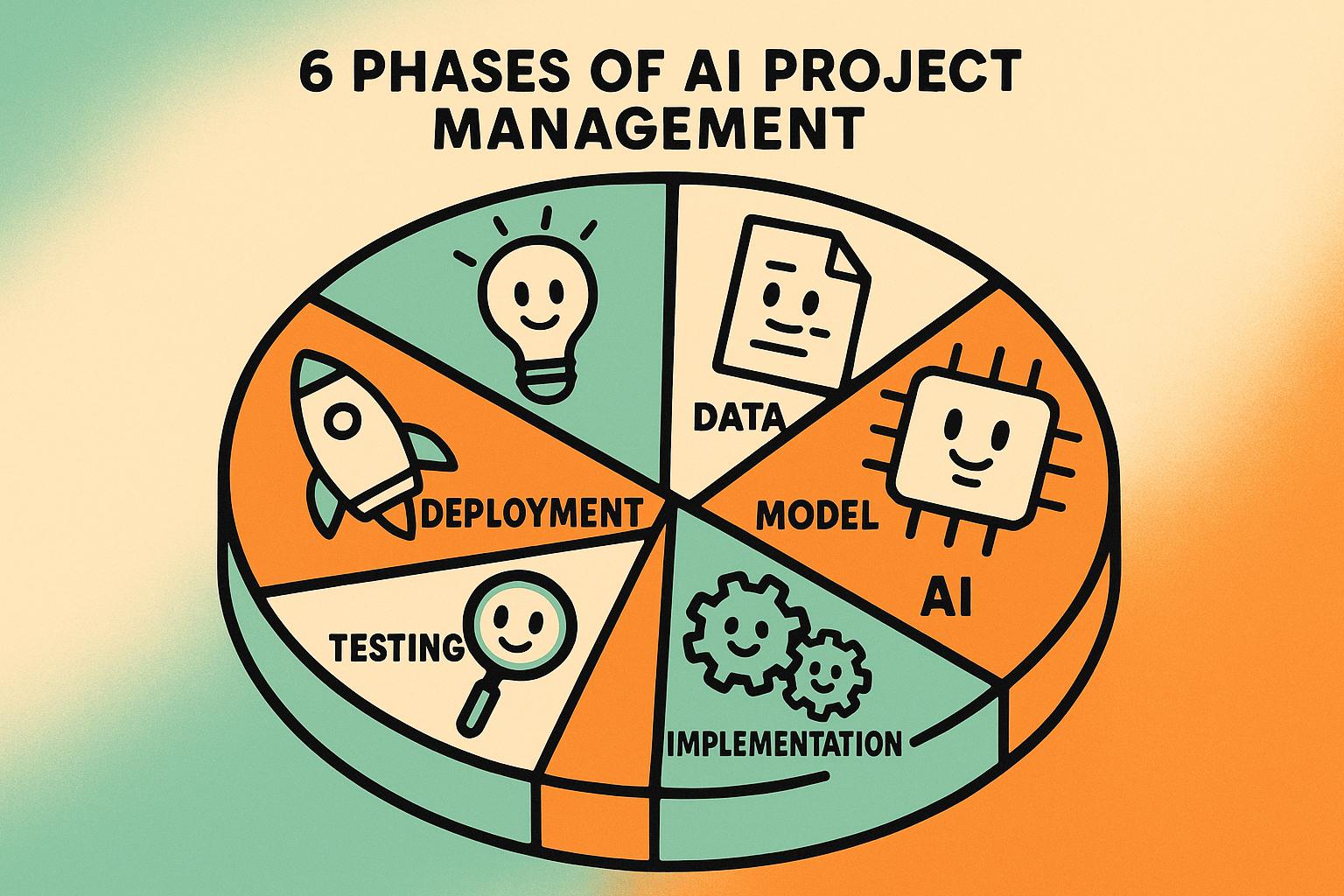

AI projects are complex and unpredictable. A structured approach can help you manage these challenges effectively. Here’s a quick summary of the six phases of AI project management:

- Problem Identification and Assessment: Define the business problem, evaluate readiness across data, infrastructure, team skills, and business alignment. Deliverable: A readiness report.

- Strategy Development and Use Case Selection: Prioritize AI use cases based on feasibility, ROI, and alignment with business goals. Deliverable: A project roadmap.

- Prototyping and Testing: Build proof-of-concept models, test them iteratively, and validate their potential. Deliverable: A working pilot.

- Full Implementation: Scale the pilot into a production-ready system, ensuring integration, testing, and compliance. Deliverable: A production system.

- Scaling and Company-Wide Adoption: Expand the AI solution across departments, standardize processes, and manage change effectively. Deliverable: Multi-department implementation.

- Monitoring and Improvement: Continuously track performance, retrain models, and refine systems to maintain effectiveness. Deliverable: An improvement plan.

This phased framework reduces risks, aligns technical progress with business goals, and ensures long-term success. Each phase builds on the last, creating a clear path from concept to full-scale deployment.

Phase 1: Problem Identification and Assessment

Every successful AI project begins with a clear understanding of the problem and a thorough assessment of organizational readiness. This phase lays the groundwork for everything that follows, setting the tone for either success or failure.

Identifying Business Problems

The foundation of successful AI implementation lies in pinpointing specific challenges where AI can make a measurable impact. It’s not about chasing trends but about finding areas where AI can genuinely enhance human capabilities and deliver meaningful results.

For many U.S. businesses, common triggers for considering AI include operational inefficiencies, inconsistent data analysis, and lackluster personalization in marketing efforts. To uncover these challenges, organizations often turn to structured approaches.

Cross-functional workshops are particularly effective. Bringing together product managers, engineers, and subject matter experts, these sessions map out the user journey and identify bottlenecks. This collaborative effort often highlights areas where AI can shine - tasks like pattern recognition, prediction, classification, or automation. For example, a retail company might discover that frequent stockouts and overstocking are costing thousands of dollars each month, pointing to inventory management as a prime candidate for AI solutions.

In addition to workshops, stakeholder interviews and reviews of operational metrics can reveal inefficiencies that might otherwise go unnoticed. The objective is to identify problems where AI can deliver substantial gains, not minor improvements that simpler methods could achieve just as well.

Once these pain points are identified, the next step is to evaluate whether the organization is ready to undertake a robust AI initiative.

"We know AI can feel overwhelming. Let us guide you with care and expertise, helping you implement AI solutions that work for your business - so you can stay ahead without getting left behind." - AskMiguel.ai

Business Readiness Assessment

After identifying potential AI opportunities, it’s crucial to assess whether your organization is prepared to execute an AI initiative. This evaluation involves four key areas that will determine the success of your project.

- Data maturity: Data is the backbone of any AI project. Start by auditing your data sources, evaluating quality and availability, and reviewing governance practices. Poor data quality is a major reason why AI projects fail, so this step is critical. Ensure your data is clean, well-labeled, and accessible across departments.

- Technical infrastructure: Assess your existing technology stack, cloud readiness, and computational resources. Determine whether your systems can handle the demands of AI models and whether new AI components can be integrated into them cleanly.

- Team capabilities: Evaluate your internal expertise in data science, engineering, and domain knowledge. Many organizations overestimate their capabilities, leading to unrealistic timelines and expectations. Identifying skill gaps early allows you to plan for additional training or external support.

- Business alignment: Ensure leadership is fully on board and that clear objectives are set. Without executive buy-in and defined success metrics, even the most technically sound AI projects can fail to deliver value.
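To make the four-area assessment comparable across teams, some organizations score each area on a simple scale and flag anything below a cutoff. The sketch below is illustrative only: the 1-5 scale, the cutoff of 3, and the example scores and notes are all assumptions, not a standard.

```python
from dataclasses import dataclass

# Hypothetical 1-5 scale: 1 = not ready, 5 = fully ready.
READINESS_CUTOFF = 3  # illustrative threshold; tune for your organization


@dataclass
class AreaScore:
    area: str
    score: int       # 1-5
    notes: str = ""


def find_gaps(scores, cutoff=READINESS_CUTOFF):
    """Return the areas scoring below the cutoff, weakest first."""
    for s in scores:
        if not 1 <= s.score <= 5:
            raise ValueError(f"{s.area}: score must be between 1 and 5")
    return sorted((s for s in scores if s.score < cutoff), key=lambda s: s.score)


def overall_readiness(scores):
    """Unweighted average across all areas."""
    return sum(s.score for s in scores) / len(scores)


# Hypothetical assessment results.
assessment = [
    AreaScore("Data maturity", 2, "Sales data siloed by region"),
    AreaScore("Technical infrastructure", 4),
    AreaScore("Team capabilities", 3),
    AreaScore("Business alignment", 5),
]

for gap in find_gaps(assessment):
    print(f"GAP: {gap.area} (score {gap.score}/5) {gap.notes}".rstrip())
print(f"Overall readiness: {overall_readiness(assessment):.1f} / 5")
```

A single average can hide one weak area behind several strong ones, which is why the gaps are listed separately rather than relying on the overall score.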

External experts, like those at AskMiguel.ai, can provide valuable insights during this assessment. Their experience across industries and objective perspective can help benchmark your readiness and identify areas needing improvement.

At the end of this evaluation, consolidate your findings into a clear report that will guide the next steps in your AI journey.

Deliverable: Readiness Report

The final output of Phase 1 is a readiness report - a comprehensive document that serves as the foundation for your AI strategy. This report organizes all assessment findings into an actionable format, making it easy for stakeholders to understand and take action.

Key elements of the report include:

- A gap analysis matrix that highlights areas requiring attention before AI implementation. This could involve data integration needs, infrastructure upgrades, or team training requirements. Visual tools like heat maps often make these gaps easier to grasp.

- A prioritized list of AI opportunities, ranking use cases by their potential impact and feasibility. Factors like data quality, technical complexity, ROI potential, and alignment with business goals are considered. High-impact, low-complexity opportunities are typically chosen as pilot projects.

The time needed to complete this phase varies. Small businesses might spend 2-4 weeks, while larger enterprises could require 4-6 weeks for a thorough assessment. Budget-wise, this phase typically accounts for 5-10% of the total AI investment - a small but crucial expense for building a strong foundation.

The report also includes actionable recommendations, such as selecting pilot projects, improving data infrastructure, or addressing skill gaps through training or partnerships. These recommendations act as a roadmap for the next phases of the project.

Widely cited industry estimates suggest that up to 85% of AI projects fail to meet their goals, often because of poor problem definition and weak alignment at this early stage. A well-structured readiness assessment improves the odds of success and helps avoid costly missteps later on.

Phase 2: Strategy Development and Use Case Selection

Once your readiness assessment is complete, it’s time to turn those insights into a clear, actionable strategy. This phase focuses on narrowing down priorities and selecting specific AI use cases that can deliver measurable benefits. The goal is to create a roadmap that outlines how to implement these use cases effectively.

The trick here is to be both selective and deliberate. Not every opportunity identified in Phase 1 needs immediate attention. The sweet spot lies at the intersection of high business impact and practical implementation.

Selecting Priority Use Cases

Picking the right AI use cases isn’t about guesswork; it’s about using a structured evaluation process. Organizations that succeed often rely on frameworks that assess feasibility, ROI, and alignment with broader business goals.

- Feasibility: This involves reviewing your data quality and technical infrastructure. Even the best ideas will fail without reliable data or the right systems in place.

- ROI: Calculate clear financial projections, including direct savings (like reduced labor costs) and indirect benefits (such as better customer satisfaction).

- Strategic Alignment: Ensure the use case supports your company’s main objectives. For example, automating invoice processing might score well on feasibility and ROI but could be deprioritized if it doesn’t align with key business goals.
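One common way to make this evaluation explicit is a weighted scoring matrix. In the sketch below, the weights, the 1-5 scale, and the candidate use cases and their scores are hypothetical; they only show how the three criteria can be combined into one ranking.

```python
# Hypothetical weights; they must sum to 1.0 and should reflect your priorities.
WEIGHTS = {"feasibility": 0.35, "roi": 0.40, "alignment": 0.25}


def weighted_score(scores, weights=WEIGHTS):
    """Combine 1-5 criterion scores into a single weighted score."""
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1.0")
    return sum(weights[c] * scores[c] for c in weights)


# Hypothetical candidates scored 1-5 on each criterion.
candidates = {
    "Inventory demand forecasting": {"feasibility": 4, "roi": 5, "alignment": 5},
    "Invoice processing automation": {"feasibility": 5, "roi": 4, "alignment": 2},
    "Churn prediction": {"feasibility": 3, "roi": 4, "alignment": 4},
}

ranked = sorted(candidates, key=lambda name: weighted_score(candidates[name]), reverse=True)
for name in ranked:
    print(f"{weighted_score(candidates[name]):.2f}  {name}")
```

With these weights, invoice automation still ranks second despite weak alignment. Raising the alignment weight, or adding a minimum alignment score as a hard filter before ranking, is one way to deprioritize it as the example above describes.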

In January 2025, Palo Alto Networks used a formal framework to choose use cases for its AI-powered cybersecurity platform. By evaluating feasibility, ROI, and alignment, it prioritized automated threat detection, which resulted in a 40% cut in incident response times and $3.2 million in annual savings. The initiative, led by CEO Nikesh Arora, included stakeholder workshops and KPI setting.

"We build AI systems that multiply human output - not incrementally, exponentially. Our solutions drive measurable growth and lasting competitive advantage." - AskMiguel.ai

Experts like AskMiguel.ai suggest starting with smaller, high-impact pilot projects. These quick wins not only reduce risk but also build confidence in the organization’s ability to harness AI effectively.

Setting Success Metrics

Defining success metrics is critical for tracking progress and justifying your investment. Without clear KPIs, it’s impossible to know whether you’ve hit your goals. Metrics should focus on business outcomes rather than technical achievements.

- Financial Metrics: These include cost savings, revenue growth, and efficiency gains, all measured in dollars.

- Operational Metrics: Metrics like reduced processing times, improved accuracy, or higher customer satisfaction scores complement financial measures.

- Timeline Metrics: Set realistic expectations for when results will materialize. Some benefits might appear quickly, while others could take months.
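Financial metrics are easier to compare across use cases when they are reduced to a few standard figures, such as first-year ROI and payback period. The sketch below uses a simple, undiscounted calculation, and every dollar amount in it is hypothetical; a full business case would usually also discount future cash flows.

```python
def simple_roi(total_benefit, total_cost):
    """ROI as a fraction: (benefit - cost) / cost."""
    if total_cost <= 0:
        raise ValueError("total_cost must be positive")
    return (total_benefit - total_cost) / total_cost


def payback_months(upfront_cost, monthly_net_benefit):
    """Months until cumulative net benefit covers the upfront cost (undiscounted)."""
    if monthly_net_benefit <= 0:
        return float("inf")  # the investment never pays back
    return upfront_cost / monthly_net_benefit


# Hypothetical first-year figures for one use case, in U.S. dollars.
upfront = 150_000          # build and integration cost
monthly_run_cost = 5_000   # hosting, monitoring, maintenance
monthly_savings = 25_000   # e.g., reduced labor and stockout costs

first_year_cost = upfront + 12 * monthly_run_cost
first_year_benefit = 12 * monthly_savings
print(f"First-year ROI: {simple_roi(first_year_benefit, first_year_cost):.0%}")
print(f"Payback: {payback_months(upfront, monthly_savings - monthly_run_cost):.1f} months")
```

Indirect benefits such as customer satisfaction are hard to express in dollars; they are usually tracked as operational metrics alongside these figures rather than forced into the ROI calculation.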

Budgeting for this phase isn’t just about money - it’s also about assigning the right people and tools. Each use case needs clear ownership, a dedicated team, and access to the necessary resources.

Deliverable: Project Roadmap

The roadmap is where all your planning comes together. It lays out the prioritized use cases, success metrics, and a timeline of milestones, turning your analysis into a step-by-step plan.

Start with use cases that offer high impact but are relatively simple to implement. These pilot projects provide valuable learning experiences and build momentum before tackling more complex initiatives.

- Timeline Planning: Estimate how long each use case will take based on complexity and resource availability. A typical AI pilot might take 3-4 months from data preparation to initial deployment, while more intricate projects could stretch to 6-12 months. Always build in buffer time for unexpected challenges like data quality issues or system integration delays.

- Milestones: Define key checkpoints to evaluate progress. Examples include completing data preparation, finishing model development, and reaching deployment readiness. Each milestone should have clear criteria for success and decision points for whether to proceed, adjust, or pause.

- Risk Management: Identify potential obstacles early, such as poor data quality, technical challenges, or resistance from stakeholders. Include specific plans to address these risks.
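Milestone checkpoints work best when the proceed, adjust, or pause decision follows a rule agreed on in advance. The sketch below encodes one possible rule, which is an assumption rather than a standard: a failed blocking criterion pauses the project, while misses on non-blocking criteria mean continuing with adjustments. The milestone and criteria names are hypothetical.

```python
from enum import Enum


class Decision(Enum):
    PROCEED = "proceed"
    ADJUST = "adjust"
    PAUSE = "pause"


def gate_decision(criteria_results, blocking):
    """Return (decision, failed_criteria) for a milestone checkpoint.

    criteria_results: {criterion name: True if met}.
    blocking: criteria that must be met before work can continue.
    """
    missing = set(blocking) - set(criteria_results)
    if missing:
        raise KeyError(f"no result recorded for: {sorted(missing)}")
    failed = sorted(name for name, met in criteria_results.items() if not met)
    if set(failed) & set(blocking):
        return Decision.PAUSE, failed   # a blocking criterion failed: stop and reassess
    if failed:
        return Decision.ADJUST, failed  # only non-blocking misses: continue with fixes
    return Decision.PROCEED, failed


# Hypothetical "data preparation complete" milestone.
results = {
    "training data covers 12 months": True,
    "missing values below 2%": False,
    "security review approved": True,
}
decision, failed = gate_decision(results, blocking={"security review approved"})
print(decision.value, failed)
```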

In March 2024, AskMiguel.ai worked with a mid-sized U.S. manufacturer to create a roadmap for AI-driven workflow automation. By focusing on high-impact use cases with clear ROI, the company boosted production efficiency by 22% and saved $1.1 million annually. The roadmap included phased implementation and detailed resource allocation.

| Use Case Evaluation Criteria | Description | Example Metric |
|---|---|---|
| Feasibility | Technical and operational readiness | % of data available |
| ROI | Financial benefits | $ savings/year |
| Strategic Alignment | Fit with business priorities | Priority score |
| Ethical/Regulatory Impact | Compliance and bias considerations | Compliance rating |

The roadmap should also include fallback strategies. If a use case hits a roadblock, having alternative approaches ensures progress continues. This flexibility is essential, especially since only about 15% of AI projects reach full deployment and deliver measurable results.

Your roadmap becomes the guiding document for your AI initiative, ensuring everyone understands their roles and responsibilities. It sets the stage for Phase 3, where you’ll begin building and testing your first AI solutions.

Phase 3: Prototyping and Testing

This phase moves the project from planning into action, turning use cases into tangible, testable models. It’s a vital step for confirming that your AI solution is on the right track before committing significant resources. With an often-cited 87% of AI and data science projects never reaching production, frequently because of insufficient validation, careful prototyping and testing can make or break your project.

Building Proof of Concept Models

The proof of concept (PoC) is your first chance to see if your approach can actually solve the problem at hand. The aim is to build a simple, functional version of your AI solution that delivers measurable results.

Start by assembling a cross-functional team. Include data scientists, engineers, and domain experts who understand the business challenge. This mix of skills ensures your prototype is both technically sound and aligned with real-world needs.

Next, set clear success criteria based on the metrics outlined in Phase 2. For example, if you're working on an AI-driven customer support tool, you might focus on response accuracy or faster resolution times. These benchmarks will guide your efforts and help you evaluate progress.

Data selection is another key step. Use a representative subset of your data to train and test the model. This way, your prototype will encounter scenarios it’s likely to face in production, giving you a realistic sense of its performance.
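One simple way to keep a subset representative is stratified sampling: draw the same fraction from each important segment (here, each label) so that rare but important cases are not lost. The standard-library sketch below assumes records are dictionaries with a hypothetical `label` field; libraries such as scikit-learn offer the same idea through `train_test_split(..., stratify=...)`.

```python
import random
from collections import defaultdict


def stratified_sample(records, label_key, fraction, seed=42):
    """Sample `fraction` of records from each label group, keeping at least one per group."""
    if not 0 < fraction <= 1:
        raise ValueError("fraction must be in (0, 1]")
    groups = defaultdict(list)
    for record in records:
        groups[record[label_key]].append(record)
    rng = random.Random(seed)  # fixed seed so the subset is reproducible
    sample = []
    for items in groups.values():
        k = max(1, round(len(items) * fraction))  # never drop a whole group
        sample.extend(rng.sample(items, k))
    return sample


# Hypothetical support tickets: 950 routine, 50 escalations.
tickets = [{"id": i, "label": "routine"} for i in range(950)]
tickets += [{"id": 950 + i, "label": "escalation"} for i in range(50)]

subset = stratified_sample(tickets, "label", fraction=0.1)
counts = defaultdict(int)
for t in subset:
    counts[t["label"]] += 1
print(dict(counts))
```

A purely random 10% sample could, by chance, contain very few escalations; stratifying keeps their 5% share intact in the prototype data.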

In 2022, Siemens Healthineers developed a PoC for automated MRI image segmentation. They built the prototype in just eight weeks using agile sprints and tested it on anonymized patient data. The result? A 92% accuracy rate and a deployment across 12 hospitals, where it reduced radiologist workloads by 28% in the first year.

During this phase, experiment with different algorithms and model architectures. If the results aren’t promising, don’t hesitate to pivot. Documenting these experiments will provide valuable insights for future iterations and testing cycles.

Testing with Agile Methods

Agile methodologies bring speed and flexibility to AI development. Instead of spending months building a complete system, you work in short, iterative sprints. This approach allows for continuous feedback and rapid improvements.

Each sprint focuses on a specific aspect, like data preprocessing, model training, or user interface development. This targeted approach helps identify and fix issues early, rather than discovering them after extensive development.

Stakeholder feedback is critical during agile testing. Regular check-ins with business users, technical teams, and decision-makers ensure the prototype stays aligned with business goals. Often, these discussions reveal insights that weren’t obvious during the planning stage.

In 2023, AskMiguel.ai partnered with a logistics company to prototype a route optimization tool. Over four agile sprints, the pilot cut delivery times by 15% and saved $120,000 in fuel costs within three months. Stakeholder input and clear success metrics were key to its eventual adoption across the company.

Agile testing also helps manage the unpredictable nature of AI projects. Machine learning models can behave unexpectedly, and data quality issues may arise. By working in short cycles, you can adapt to these challenges while keeping the project on track.

Testing during sprints should include both technical and business validation. This dual focus ensures you’re not just building a technically impressive model but one that solves the actual problem. Agile methods can reduce time-to-market by up to 30% compared to traditional approaches, giving you a competitive edge while demonstrating value to stakeholders.

Deliverable: Working Pilot

The outcome of this phase is a working pilot - a functional prototype that showcases your AI solution’s potential. While it’s not a polished production system, the pilot should be robust enough for real-world testing.

Key elements of the working pilot include:

- A core AI model that meets the success criteria established earlier.

- Functional, though not fully refined, user interfaces.

- Integration points with existing systems to ensure smooth data flows.

Evaluate the pilot using both technical and business metrics. For example:

| Success Criteria Category | Example Metrics | Measurement Method |
|---|---|---|
| Technical Performance | 90% accuracy, <2 sec response time | Automated testing on a validation dataset |
| Business Impact | 25% reduction in processing time | Before/after comparison with current process |
| User Acceptance | 4.0/5.0 satisfaction score | User surveys and feedback sessions |
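Checking the pilot against these criteria can be automated so that every test run produces the same pass/fail report. The sketch below hard-codes the example thresholds from the table; reading "<2 sec response time" as a 95th-percentile latency is an assumption, and the measured values are hypothetical.

```python
import operator

# Example thresholds from the success criteria table.
# "p95_response_sec" assumes the response-time target applies to 95th-percentile latency.
CRITERIA = {
    "accuracy": (operator.ge, 0.90),
    "p95_response_sec": (operator.lt, 2.0),
    "processing_time_reduction": (operator.ge, 0.25),
    "satisfaction": (operator.ge, 4.0),
}


def evaluate_pilot(measured, criteria=CRITERIA):
    """Return {criterion: passed}; a criterion with no measurement counts as failed."""
    report = {}
    for name, (compare, threshold) in criteria.items():
        value = measured.get(name)
        report[name] = value is not None and compare(value, threshold)
    return report


# Hypothetical pilot results.
measured = {
    "accuracy": 0.93,
    "p95_response_sec": 1.4,
    "processing_time_reduction": 0.21,
    "satisfaction": 4.2,
}
report = evaluate_pilot(measured)
for name, passed in report.items():
    print(f"{'PASS' if passed else 'FAIL'}  {name}")
```

Treating a missing measurement as a failure is a deliberate choice: it prevents an unmeasured criterion from silently passing.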

Document everything - objectives, methods, outcomes, and lessons learned. Use visual demonstrations and performance dashboards to help stakeholders see both the potential and the limitations of your prototype.

This phase also provides a clearer picture of the resources needed to scale the solution. From computing power to data storage and human expertise, the insights gained here will inform your planning for Phase 4. Additionally, hands-on testing reveals which risks are most pressing and require attention during full implementation.

A successful working pilot not only proves your concept but also lays the groundwork for securing the support and resources needed to move into full-scale deployment in the next phase.

Phase 4: Full Implementation

After validating the pilot in Phase 3, Phase 4 prepares the solution for full-scale production. This phase is about transforming a functional pilot into a production-ready system that aligns with business needs. The challenge lies in integrating the AI solution smoothly with existing workflows while maintaining the performance demonstrated in earlier stages. Problems that slip through at this stage directly affect day-to-day operations and user adoption, so careful execution is key.

Data Preparation and Model Training

Preparing data for production is much more demanding than it was during the pilot phase. While the prototype may have used clean and representative data subsets, production requires handling the messy, complex reality of real-world business data.

Start by establishing automated processes for data collection and cleaning. Real-world data often contains problems like inconsistencies, missing values, or unexpected formats that may not have appeared in your pilot dataset. Your data pipeline should be equipped to detect and resolve these issues without manual intervention.
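A cleaning step in such a pipeline typically normalizes what it can and sets aside what it cannot, rather than silently dropping rows. The sketch below assumes rows arrive as dictionaries of strings (for example, from `csv.DictReader`); the field names and accepted date formats are hypothetical.

```python
from datetime import datetime

# Date formats observed in the (hypothetical) source systems.
DATE_FORMATS = ("%Y-%m-%d", "%m/%d/%Y", "%d.%m.%Y")
REQUIRED = ("sku", "date", "quantity")


def is_blank(value):
    return value is None or (isinstance(value, str) and not value.strip())


def parse_date(raw):
    """Try each known format in turn; raise ValueError if none match."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(raw.strip(), fmt).date()
        except ValueError:
            continue
    raise ValueError(f"unrecognized date: {raw!r}")


def parse_quantity(raw):
    """Accept '12' or '12.0', but reject fractional or non-numeric quantities."""
    value = float(raw)
    if not value.is_integer():
        raise ValueError(f"non-integer quantity: {raw!r}")
    return int(value)


def clean_records(rows):
    """Normalize rows; return (clean, quarantined) so bad rows are kept for review."""
    clean, quarantined = [], []
    for row in rows:
        try:
            missing = [f for f in REQUIRED if is_blank(row.get(f))]
            if missing:
                raise ValueError(f"missing fields: {missing}")
            clean.append({
                "sku": row["sku"].strip().upper(),
                "date": parse_date(row["date"]),
                "quantity": parse_quantity(row["quantity"]),
            })
        except ValueError as err:
            quarantined.append({"row": row, "error": str(err)})
    return clean, quarantined


rows = [
    {"sku": " ab-101 ", "date": "2024-03-05", "quantity": "12"},
    {"sku": "AB-102", "date": "03/06/2024", "quantity": "7.0"},
    {"sku": "AB-103", "date": "", "quantity": "4"},
    {"sku": "AB-104", "date": "2024-13-01", "quantity": "3"},
]
clean, quarantined = clean_records(rows)
print(len(clean), "clean,", len(quarantined), "quarantined")
```

Tracking the quarantine rate over time is also a cheap early signal that an upstream system has changed its format.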

Refining features is also critical. Features that worked well in the prototype might need adjustments to perform effectively across the broader range of production data. Document every transformation and use version control to keep track of feature updates.

Security and compliance are non-negotiable when working with production data. If your system handles sensitive customer information, ensure it complies with regulations such as GDPR or CCPA. Regular security audits should become part of your routine processes.

Training models for production involves more than just scaling up the pilot approach. Retrain your models using the full dataset to uncover performance characteristics that may not have been apparent during prototyping. Automate retraining pipelines to keep your models updated as new data comes in. Additionally, optimize performance to handle production-scale data volumes. For example, a system that works efficiently with 10,000 records might struggle with millions, so consider distributed training and choose or tune algorithms to fit the compute resources you have.

Once your data pipelines and models are ready, the next step is to integrate the solution into your existing systems.

System Integration and Testing

Integrating your AI solution into legacy systems is a delicate process. It must work seamlessly with databases and workflows that have been refined over years, if not decades.

Design modular APIs to ensure smooth integration with existing systems. This approach reduces the risk of system-wide failures and makes it easier to roll back changes if necessary. Collaboration with IT and security teams is essential from the start, as their knowledge of the current infrastructure is invaluable for a successful integration.

Testing is a cornerstone of this phase. It’s not enough to assume the system will work; you need to verify it. Key testing types include:

- Unit testing: Ensures individual components function as expected.

- Integration testing: Confirms the AI system communicates effectively with existing databases and applications.

- Performance testing: Validates how the system performs under realistic conditions.

- Stress testing: Identifies breaking points under extreme loads.

- User acceptance testing (UAT): Engages end-users to ensure the system meets business requirements and fits into their workflows.
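At the unit-testing level, even small data-preparation helpers deserve tests, because a silent change in preprocessing can degrade a model without any visible error. The sketch below uses Python’s built-in `unittest`; `normalize_sku` is a hypothetical helper standing in for one of your own preprocessing functions.

```python
import unittest


def normalize_sku(raw):
    """Hypothetical preprocessing helper: trim whitespace and upper-case SKU codes."""
    if not isinstance(raw, str) or not raw.strip():
        raise ValueError("SKU must be a non-empty string")
    return raw.strip().upper()


class NormalizeSkuTest(unittest.TestCase):
    def test_trims_and_uppercases(self):
        self.assertEqual(normalize_sku("  ab-101 "), "AB-101")

    def test_already_normalized_is_unchanged(self):
        self.assertEqual(normalize_sku("AB-101"), "AB-101")

    def test_rejects_blank_input(self):
        with self.assertRaises(ValueError):
            normalize_sku("   ")
```

Saved to a file, these tests can be run with `python -m unittest <file>` and wired into the same CI pipeline that later runs integration and performance tests.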

Involving actual users during UAT can uncover usability issues or gaps that technical tests might miss.

For instance, AskMiguel.ai successfully deployed an AI-powered CRM for a logistics company in the U.S. The solution integrated with existing databases, automated lead scoring, and provided real-time insights for sales teams. After thorough testing and user training, the system boosted lead conversion rates by 18% and cut manual data entry by 40%, showcasing the importance of a structured implementation process.

Security and compliance testing also require special attention. Conduct penetration tests to identify vulnerabilities, ensure data encryption is effective, and verify that access controls are functioning properly. These measures help ensure the system is secure and ready for deployment.

Deliverable: Production System

The final outcome of Phase 4 is a fully operational production system, ready for company-wide use. This isn’t just an expanded version of your pilot; it’s a comprehensive, enterprise-grade solution designed to meet the daily demands of your business.

A production system should include robust monitoring and alerting capabilities. Real-time dashboards can track performance metrics like system uptime, model accuracy, and user engagement, while automated alerts allow administrators to address issues quickly. Alongside technical monitoring, prepare detailed documentation and training materials. These should include user guides, administrator manuals, and troubleshooting resources to ensure smooth operation for both end-users and system managers.

Backup and disaster recovery procedures are equally important. Automate backups and clearly document recovery steps to enable rapid restoration of services in case of an issue. Effective change management is also crucial. Develop clear communication plans to explain the benefits of the system, address concerns proactively, and provide multiple feedback channels to reduce resistance and encourage adoption.

With a fully tested and validated production system in place, the next step is Phase 5 - scaling the solution across your organization to ensure widespread adoption.

Phase 5: Scaling and Company-Wide Adoption

Now that your production system is fully validated, it’s time to expand your AI solution across the entire organization. This phase can consume 40–50% of the AI budget, which reflects how complex the move from a pilot to a full enterprise rollout is. The goal is to take a successful pilot and integrate it into every part of your business.

Scaling requires tackling three big challenges: ensuring your infrastructure can handle the increased load, standardizing workflows across departments, and managing the human side of change. The success of this phase determines whether your AI project delivers company-wide impact or remains siloed in one department.

Process Standardization and Optimization

To scale effectively, you need to turn your pilot into a system that’s repeatable and reliable across multiple departments and use cases. This means focusing on infrastructure, API development, and security measures.

Optimizing infrastructure is crucial as user demand grows beyond the pilot stage. Your system must handle peak loads without hiccups, which requires smart cloud resource allocation, load balancing, and redundancy measures. Capacity planning and rigorous load testing can help you avoid outages or performance issues.
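Capacity planning often starts with back-of-the-envelope arithmetic: projected peak request rate divided by what one instance sustained in load testing, plus headroom for spikes and a spare instance for redundancy. The 30% headroom, the N+1 redundancy, and the traffic figures below are assumptions for illustration.

```python
import math


def instances_needed(peak_rps, rps_per_instance, headroom=0.30, redundancy=1):
    """Instances required to serve peak load with spare capacity.

    headroom: extra capacity above peak, as a fraction of peak (assumed 30%).
    redundancy: extra instances so a single failure doesn't cause an outage (N+1).
    """
    if rps_per_instance <= 0:
        raise ValueError("rps_per_instance must be positive")
    base = math.ceil(peak_rps * (1 + headroom) / rps_per_instance)
    return base + redundancy


# Hypothetical figures: the company-wide rollout is projected to peak at
# 400 requests/sec, and load tests showed ~50 requests/sec per instance.
print(instances_needed(peak_rps=400, rps_per_instance=50))
```

Rounding up before adding redundancy keeps the estimate conservative; autoscaling policies can then be set around this baseline rather than replacing it.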

Standardized APIs are the backbone of smooth integration across departments. Develop and document APIs that are easy to adopt and maintain. Version control is equally important to avoid compatibility issues as your system evolves.

Strengthening security becomes even more critical during scaling. Implement regular security audits, encrypt sensitive data, and set up strict access controls to protect both your data and AI models. What might have been minor vulnerabilities in the pilot stage can become serious risks at scale.

Data governance also grows more complex as you manage varying data quality and access controls across departments. Clear documentation of data handling procedures, transformation rules, and compliance requirements ensures consistency and accountability.

By 2016, UPS had scaled its AI-powered route optimization system, ORION, from a pilot to full deployment across its U.S. operations. This required standardizing data integration processes, extensive driver training, and a well-executed change management campaign. The results? UPS reports saving roughly 100 million miles and about 10 million gallons of fuel per year.

Change Management and Team Training

Scaling AI isn’t just a technical challenge - it’s also a people challenge. A widely cited Gartner estimate holds that 85% of AI projects fall short of their goals, and difficulties with scaling and adoption are a common reason. However, organizations with structured change management and training programs are 2.5 times more likely to scale successfully, according to a 2023 McKinsey survey.

To address employee concerns, emphasize that AI is designed to enhance their work, not replace it.

Training is key and should be tailored to different groups within your organization:

- Executives need a clear understanding of AI’s strategic value and impact on the business.

- End-users require hands-on training to integrate AI into their daily workflows.

- IT teams need in-depth technical knowledge to manage, maintain, and troubleshoot the system.

Offer a mix of training formats - workshops, e-learning modules, and ongoing support resources - to suit different learning styles. Empowering “AI champions” within each department can also accelerate adoption. These individuals act as liaisons between technical teams and end-users, helping to address concerns and build confidence.

Effective communication is another cornerstone. Share early wins, highlight measurable benefits, and provide regular updates on the rollout’s progress. Create multiple feedback channels so users can report issues and offer suggestions, fostering a sense of ownership and collaboration.

In 2023, Procter & Gamble expanded its AI-driven demand forecasting tool from a single product line to its entire North American operations. This rollout included standardized data pipelines, cross-functional training, and a dedicated change management team. Within six months, P&G reduced inventory costs by 20% and improved forecast accuracy by 15%.

Deliverable: Multi-Department Implementation

The ultimate goal of Phase 5 is a fully scaled system that works seamlessly across departments. This involves several key deliverables to ensure sustainable adoption and ongoing success.

Cross-functional adoption metrics are essential for tracking progress. Use a mix of technical and business indicators - such as model performance, uptime, cost savings, and efficiency improvements. User adoption can be measured through metrics like daily active users, feature usage rates, and time-to-proficiency.

A strong monitoring and governance framework is critical for maintaining momentum. This includes automated dashboards, alerts for performance issues, and regular review cycles. Aim for benchmarks such as 70–80% user engagement in the first quarter and satisfaction scores of 4+ out of 5.
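Engagement is easiest to act on when it is broken down by department, since a company-wide average can hide a team that has not adopted the system. The sketch below counts each user once per period and compares each department against the low end of the 70–80% benchmark; the usage events and headcounts are hypothetical.

```python
from collections import defaultdict

TARGET = 0.70  # low end of the 70-80% first-quarter engagement benchmark


def engagement_by_department(active_users, headcount):
    """Share of each department's users who were active in the period.

    active_users: iterable of (user_id, department) pairs from usage logs.
    headcount: {department: number of users given access}.
    """
    active = defaultdict(set)
    for user_id, dept in active_users:
        active[dept].add(user_id)  # count each user once, however often they log in
    return {dept: len(active[dept]) / n for dept, n in headcount.items() if n > 0}


# Hypothetical usage events for one quarter.
events = [("u1", "Sales"), ("u1", "Sales"), ("u2", "Sales"), ("u3", "Sales"),
          ("u4", "Finance"), ("u5", "Finance")]
headcount = {"Sales": 4, "Finance": 4}

rates = engagement_by_department(events, headcount)
for dept, rate in rates.items():
    flag = "on track" if rate >= TARGET else "needs attention"
    print(f"{dept}: {rate:.0%} ({flag})")
```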

Document standard operating procedures (SOPs) to guide teams in using, maintaining, and troubleshooting the AI system. Include training certification programs and detailed technical documentation, such as API specs and integration guides. A lessons-learned report from this phase can also provide valuable insights for future AI initiatives.

These efforts set the stage for the next phase, where long-term monitoring and continuous improvement will help your organization sustain and maximize the value of its AI investment. With Phase 5 complete, your AI solution is no longer just a pilot - it’s a core part of your business.

Phase 6: Monitoring and Improvement

Once your AI solution is fully deployed and scaled across departments, the journey doesn’t end there. Continuous monitoring is crucial to maintain its effectiveness and ensure it keeps delivering value. Over time, data patterns may shift, business priorities can change, and models might drift from their original performance levels. This phase is all about turning your AI solution into a dynamic, evolving asset for your business.

Performance Monitoring and Maintenance

The foundation of effective monitoring lies in tracking the right metrics at the right intervals. A robust monitoring strategy focuses on three core areas: model performance, business impact, and system reliability.

Start by evaluating how well the model is performing. Metrics like accuracy, precision, and recall are essential, but they’re not the whole story. For instance, a customer service chatbot might excel at understanding queries, yet if customer satisfaction drops, it could indicate a gap between technical success and practical value.

Business impact metrics connect AI outcomes directly to your goals. Keep an eye on indicators like ROI, cost savings, user adoption rates, and overall efficiency. Some case studies report that regular retraining and monitoring can improve AI system accuracy by 15–30% over time.

Pair these model metrics with system reliability measures like uptime, response times, and error rates. Automated alerts are a must: set them up to flag any decline in performance so you can act quickly. Additionally, watch for data drift, which occurs when the statistical distribution of production data starts to differ from that of the training data. Drift is a common cause of performance degradation and should be investigated promptly.
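One widely used drift statistic is the Population Stability Index (PSI), which compares how a feature’s values are distributed across bins in training versus production. The sketch below uses equal-width bins over the training range; the 0.1 and 0.25 cutoffs are a commonly quoted rule of thumb rather than a standard, and the feature values are synthetic.

```python
import math


def psi(expected, actual, bins=10, eps=1e-6):
    """Population Stability Index of `actual` (production) against `expected` (training).

    Uses equal-width bins over the training range; values outside that range are
    counted in the edge bins, and empty bins are floored at `eps` to avoid log(0).
    """
    if not expected or not actual:
        raise ValueError("both samples must be non-empty")
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid zero width for a constant feature

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def drift_level(score):
    """Commonly quoted rule of thumb, not a universal standard."""
    if score < 0.1:
        return "stable"
    if score < 0.25:
        return "moderate drift"
    return "significant drift"


# Synthetic feature values: production has shifted toward higher values.
training = [i / 100 for i in range(1000)]        # spread evenly over 0 to 9.99
production = [5 + i / 200 for i in range(1000)]  # concentrated in 5 to 9.995
score = psi(training, production)
print(f"PSI = {score:.2f}: {drift_level(score)}")
```

In practice this would run per feature on a schedule, with the drift level feeding the same alerting system as the performance metrics.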

Plan for regular model retraining, both on a set schedule and whenever performance metrics dip below acceptable thresholds. These practices lay the groundwork for automated management through MLOps.
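The two retraining triggers can be combined into a single check that a scheduler runs daily. In the sketch below, the 90-day maximum model age and the 0.88 accuracy floor are hypothetical values; the performance trigger is checked first so the reported reason reflects the more urgent cause.

```python
from datetime import datetime, timedelta

MAX_MODEL_AGE = timedelta(days=90)  # hypothetical scheduled retraining interval
MIN_ACCURACY = 0.88                 # hypothetical acceptable accuracy threshold


def should_retrain(last_trained, now, recent_accuracy,
                   max_age=MAX_MODEL_AGE, min_accuracy=MIN_ACCURACY):
    """Return (retrain?, reason), combining a performance trigger with a schedule."""
    if recent_accuracy < min_accuracy:
        return True, f"accuracy {recent_accuracy:.2f} below {min_accuracy:.2f}"
    if now - last_trained >= max_age:
        return True, f"model older than {max_age.days} days"
    return False, "within schedule and thresholds"


print(should_retrain(datetime(2024, 1, 1), datetime(2024, 2, 15), recent_accuracy=0.85))
```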

MLOps for Long-Term Operations

Machine Learning Operations (MLOps) provides the tools and processes to keep AI systems running smoothly at scale. With MLOps, you can automate key tasks like deploying, monitoring, and updating machine learning models.

Automated deployment pipelines and version control ensure updates are both quick and safe. They also allow you to track changes in code, data, and models effectively.

Continuous integration and deployment (CI/CD) processes bring consistency to AI updates. By automating tasks like data validation, model testing, and performance benchmarking, you reduce human error and maintain reliable updates across your organization.

Model versioning and experiment tracking are equally important. These practices create a detailed record of changes and their impact, which is invaluable for troubleshooting and future planning. Infrastructure monitoring ensures you have the computational resources needed to handle scaling. Cloud-based solutions, in particular, provide the flexibility to adjust capacity as your needs evolve.
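To show what a versioning record might capture, here is a minimal in-memory sketch. It ties each model version to its code commit, a fingerprint of its training data, its parameters, and its evaluation metrics. The `ModelRegistry` class is hypothetical. In practice a dedicated tool would persist this information; MLflow's experiment tracking and model registry are one example.

```python
import hashlib
import json
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class ModelVersion:
    version: int
    code_commit: str        # e.g. the Git commit that produced the model
    data_fingerprint: str   # hash of the exact training data used
    params: dict
    metrics: dict
    created_at: str


@dataclass
class ModelRegistry:
    """Hypothetical in-memory registry; a real one would persist records."""
    versions: list = field(default_factory=list)

    def register(self, code_commit, training_data, params, metrics):
        # Fingerprint the training data so each version is traceable to its inputs.
        payload = json.dumps(training_data, sort_keys=True).encode("utf-8")
        record = ModelVersion(
            version=len(self.versions) + 1,
            code_commit=code_commit,
            data_fingerprint=hashlib.sha256(payload).hexdigest()[:12],
            params=params,
            metrics=metrics,
            created_at=datetime.now(timezone.utc).isoformat(),
        )
        self.versions.append(record)
        return record

    def best(self, metric):
        """Return the version with the highest value for the given metric."""
        return max(self.versions, key=lambda v: v.metrics.get(metric, float("-inf")))


registry = ModelRegistry()
registry.register("a1b2c3d", [[0.2, 1], [0.7, 0]], {"learning_rate": 0.01}, {"accuracy": 0.88})
registry.register("e4f5a6b", [[0.2, 1], [0.7, 0], [0.4, 1]], {"learning_rate": 0.005}, {"accuracy": 0.91})
best = registry.best("accuracy")
print(best.version, best.code_commit, best.data_fingerprint)
```

Because each record links code, data, and results, you can trace a regression back to whatever actually changed.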

With automation and monitoring in place, you can formalize these efforts into a structured improvement plan.

Deliverable: Improvement Plan

An improvement plan acts as a roadmap for maintaining and enhancing your AI system over time. This document should include clear review cycles, performance benchmarks, and update procedures to ensure your AI stays aligned with business goals.

Set up regular review schedules that combine technical assessments with business evaluations. Define benchmarks for both technical metrics, like accuracy, and business outcomes, such as cost savings. Also, outline procedures for escalating issues when these benchmarks aren’t met.

Incorporate feedback loops from stakeholders, including end-users, department heads, and customers. Their input can highlight challenges that technical metrics might overlook.

Clearly document criteria for updates, specifying when immediate action is required and when changes can wait for routine cycles. Include approval and rollback procedures for significant updates to avoid disruptions.
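You can write update criteria and rollback procedures down as explicit rules. The sketch below assumes two hypothetical thresholds. A large metric drop demands immediate action, while a smaller one waits for the routine cycle. It also models a deployment that proceeds only with approval and reverts to the previous version if a post-deployment check fails. All names and values are illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UpdateCriteria:
    # Hypothetical thresholds; take real values from your improvement plan.
    critical_drop: float = 0.05   # drop that demands immediate action
    routine_drop: float = 0.01    # smaller drop that waits for the next review cycle


def classify_update(baseline, current, criteria=UpdateCriteria()):
    """Classify how urgently a metric decline needs an update."""
    drop = baseline - current
    if drop >= criteria.critical_drop:
        return "immediate"
    if drop >= criteria.routine_drop:
        return "routine"
    return "none"


def deploy_with_rollback(active, candidate, approved, post_deploy_check):
    """Return the model version that should be serving after an update attempt."""
    if not approved:
        return active       # significant updates require sign-off first
    if post_deploy_check(candidate):
        return candidate    # update passed its post-deployment check
    return active           # check failed: roll back to the previous version


print(classify_update(0.90, 0.84))   # immediate
print(classify_update(0.90, 0.88))   # routine
print(deploy_with_rollback("v3", "v4", approved=True, post_deploy_check=lambda v: False))  # v3
```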

As with earlier phases, measurable benchmarks and stakeholder insights are key to driving improvement. Allocate budget for retraining, infrastructure scaling, and dedicated staff time for monitoring. Keep detailed records of what works and what doesn't, and use these lessons to guide future AI projects. Finally, as regulations and ethical standards evolve, update your improvement plan so the system stays compliant and in line with current best practices.

Conclusion

The six-phase framework turns chaotic AI projects into well-structured, purpose-driven initiatives. Each phase builds on the last, creating a foundation that prioritizes long-term success over quick fixes that crumble under real-world demands.

Why Phased AI Management Works

This step-by-step approach, from problem identification through continuous improvement, builds risk management and collaboration into every stage. Phase 1's in-depth assessment helps avoid the costly mistake of building advanced solutions for teams that aren't ready or for objectives that lack clarity.

The framework is especially valuable for complex, sometimes unpredictable technologies like large language models. Phase 3, dedicated to prototyping and testing, allows teams to explore different models and techniques before committing significant resources to full deployment. This experimental phase reduces risk by enabling adjustments based on real-world data and user input.

Clear phases also make teamwork more efficient. When data scientists, engineers, and domain experts understand the phase they’re in and the expected outcomes, collaboration becomes smoother. Metrics defined in Phase 2 ensure that technical progress aligns with business goals, creating accountability throughout the project.

Most importantly, this phased approach recognizes that AI projects don’t stop at deployment. Phase 6’s focus on monitoring and improvement ensures that AI systems adapt to evolving business needs and data patterns, preventing them from becoming outdated. This structured method thrives with the right expertise guiding it.

Working with Expert Agencies

To unlock the full potential of this framework, partnering with experienced agencies can make a huge difference. Agencies like AskMiguel.ai bring both technical know-how and practical leadership to AI project management, covering everything from initial scoping to ongoing optimization. This end-to-end approach ensures no phase is overlooked due to resource limitations or knowledge gaps.

What sets expert agencies apart is their focus on exponential rather than incremental growth, aiming for returns on AI investments that extend well beyond the initial deployment.

"We build AI systems that multiply human output - not incrementally, exponentially. Our solutions drive measurable growth and lasting competitive advantage."

With a network of machine learning specialists, software engineers, and automation experts, agencies like AskMiguel.ai can adapt to the needs of each project. Whether you require data science expertise during prototyping or systems integration during implementation, their flexibility ensures the right skills are available at the right time.

By limiting the number of new clients each month, AskMiguel.ai ensures personalized attention for every project. Their experience with AI-powered CRMs, content summarization tools, and marketing automation translates theoretical frameworks into practical, impactful solutions.

The six-phase framework works because it respects both the complexity of AI technology and the realities of business execution. With expert guidance, this structured method doesn't just deliver AI systems; it creates lasting competitive advantages, connecting strategic planning to continuous improvement in a way that compounds value over time.

FAQs

What steps should organizations take to prepare their data for AI projects?

To get your data ready for AI, start by evaluating its quality and relevance. Your data needs to be accurate, consistent, and free from errors. Look for any gaps or missing pieces that might impact how well your AI model performs.

The next step is organizing and cleaning your data. This means standardizing formats, getting rid of duplicates, and ensuring everything is labeled correctly. A well-structured dataset makes it easier for AI systems to process and analyze the information effectively.
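Here is a minimal sketch of this cleaning step. It assumes records arrive as dictionaries with hypothetical `email`, `signup_date`, and `label` fields, and that dates come in one of two assumed formats. It standardizes formats, sets aside rows with missing or unparseable values for review, and drops duplicates that only become visible after standardization.

```python
from datetime import datetime

# Assumed input formats; "03/05/2024" is read as month/day/year.
DATE_FORMATS = ("%Y-%m-%d", "%m/%d/%Y")


def normalize_date(value):
    """Convert a date string to ISO format, or return None if it can't be parsed."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(value.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    return None  # unparseable: flag for review rather than guess


def clean_records(records):
    """Return (cleaned_records, indices_of_rows_needing_review)."""
    cleaned, seen, issues = [], set(), []
    for i, rec in enumerate(records):
        email = (rec.get("email") or "").strip().lower()
        date = normalize_date(rec.get("signup_date") or "")
        label = (rec.get("label") or "").strip().lower()
        if not email or date is None or not label:
            issues.append(i)      # missing or malformed field
            continue
        key = (email, date, label)
        if key in seen:
            continue              # duplicate once formats are standardized
        seen.add(key)
        cleaned.append({"email": email, "signup_date": date, "label": label})
    return cleaned, issues


records = [
    {"email": "Ana@Example.com ", "signup_date": "2024-03-05", "label": "Churn"},
    {"email": "ana@example.com", "signup_date": "03/05/2024", "label": "churn"},
    {"email": "bo@example.com", "signup_date": "not a date", "label": "stay"},
    {"email": "", "signup_date": "2024-01-02", "label": "stay"},
]
cleaned, issues = clean_records(records)
print(cleaned)
print(issues)   # [2, 3]
```

The first two rows look different but describe the same record, which is why deduplication should run after standardization rather than before.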

Lastly, prioritize data security and compliance. Make sure your data practices align with regulations like GDPR or CCPA, and take steps to safeguard sensitive information. Laying this groundwork is key to setting up your AI project for success.

How can businesses effectively manage organizational changes when scaling AI solutions across multiple departments?

Successfully managing change when scaling AI solutions calls for a mix of clear communication, thoughtful planning, and effective leadership. Begin by getting stakeholders across all departments on the same page. When everyone understands the purpose and benefits of the AI initiative, it's easier to reduce pushback and build a collaborative environment.

Create a detailed rollout plan that outlines every step, from training employees on new workflows to tackling potential hurdles and tracking progress. Actively gather feedback from teams to quickly address any challenges that arise. Finally, provide consistent support and refine the AI tools as business needs evolve. This approach not only keeps the solutions relevant but also ensures employees remain engaged and confident as changes unfold.

What are the benefits of using a phased approach in AI project management to reduce risks and align with business objectives?

A step-by-step approach to managing AI projects breaks complicated work into smaller, more achievable stages. This structure keeps teams focused throughout the process and gives them the opportunity to spot potential risks early, fine-tune objectives, and adjust strategies when necessary.

By tying each phase to specific business goals, companies can make sure their AI solutions meet their needs while keeping timelines and budgets on track. This organized method encourages teamwork, reduces expensive mistakes, and helps ensure the final product delivers as much value as possible.